FEDS Notes

January 02, 2024

Question design and the gender gap in financial literacy

Anna Tranfaglia, Alicia Lloro, and Ellen Merry

Many surveys have measured people's financial literacy with a standard set of questions covering interest, inflation, and investment diversification. Results from these surveys have consistently shown that women are less likely than men to answer the financial literacy questions correctly – the so-called financial literacy gender gap.

These standard financial literacy questions are typically administered with an explicit "don't know" answer choice. Women are much more likely than men to select "don't know," and this fact largely drives the financial literacy gender gap; men and women generally select incorrect responses at similar rates. If women tend to select "don't know" for reasons unrelated to financial literacy, such as confidence, then the observed financial literacy gender gap may reflect both differences in knowledge and other factors. One reason this gender gap matters is that financial literacy is predictive of many financial behaviors, like saving for retirement, having three months of liquid savings, and holding stocks and bonds (Bhutta, Blair, and Dettling, 2021; Hogarth and Hilgert, 2002; Lusardi and Mitchell, 2011; Board of Governors of the Federal Reserve System, 2022).

In this note, we leverage a survey experiment in the 2021 Survey of Household Economics and Decisionmaking (SHED) to explore the extent to which women's greater propensity to respond "don't know" contributes to the gender gap in financial literacy. We find that the gender gap in financial literacy shrinks when the "don't know" option is removed, because removing it increases the share of correct responses by more for women than for men. We also construct a counterfactual of what we might expect if people who answered "don't know" had instead guessed the answer. For both men and women, removing the "don't know" option increases the share of correct responses beyond what we predict would occur if people were simply guessing.

SHED Experiment

Since 2013, the SHED has been conducted each fall to measure the economic well-being of U.S. households. The 2021 SHED had over 11,000 respondents and included the following three standard financial literacy questions from Lusardi and Mitchell (2008), which have been included on numerous other surveys to measure financial literacy (correct answer in bold):

- (Interest) Suppose you had $100 in a savings account and the interest rate was 2% per year. After 5 years, how much do you think you would have in the account if you left the money to grow?

  Answers: **More than $102**, Exactly $102, Less than $102, [Don't know]

- (Inflation) Imagine that the interest rate on your savings account was 1% per year and inflation was 2% per year. After 1 year, how much would you be able to buy with the money in this account?

  Answers: More than today, Exactly the same, **Less than today**, [Don't know]

- (Diversification) Do you think the following statement is true or false? "Buying a single company's stock usually provides a safer return than a stock market mutual fund."

  Answers: True, **False**, [Don't know]

Typically, the questions are administered with an explicit "don't know" response included in the set of possible answers. In the 2021 SHED, however, whether a respondent received a "don't know" answer choice for the three financial literacy questions was randomly determined. As a result, approximately half of the respondents in the 2021 SHED (n = 5,925) received the three financial literacy questions with an explicit "don't know" response option, and the remaining respondents (n = 5,949) received the questions without the "don't know" option.
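The note does not describe how the random assignment was implemented. As a minimal sketch, assuming an independent 50/50 draw for each respondent (the function name `assign_dont_know_arm` and the seed are hypothetical), assignment could look like the following. Independent draws produce arms of roughly, not exactly, equal size, which is one way to arrive at a split like 5,925 versus 5,949.

```python
import random

def assign_dont_know_arm(respondent_ids, seed=2021):
    """Hypothetical sketch of the experiment's random assignment.

    Returns a dict mapping each respondent ID to True (sees an explicit
    "don't know" option) or False (option omitted). Each respondent is
    assigned independently with probability 0.5.
    """
    rng = random.Random(seed)
    return {rid: rng.random() < 0.5 for rid in respondent_ids}

ids = range(11_874)  # 5,925 + 5,949 respondents, as reported in the note
arms = assign_dont_know_arm(ids)
with_dk = sum(arms.values())  # True counts as 1
print(with_dk, len(arms) - with_dk)
```

Because assignment is random, differences in answers between the two arms can be attributed to the question format rather than to who received which version.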

This experiment builds on previous work by Bucher-Koenen et al. (2021), who examined a similar experiment conducted on the De Nederlandsche Bank Household Survey (DHS), a panel survey of the Dutch central bank that is representative of the Dutch-speaking population in the Netherlands. However, the two experiments differ: ours gives the two versions of the questions (with and without "don't know") to two different groups of respondents on the same survey, while theirs gives the two versions of the questions to the same group of respondents twice, 6 weeks apart.

Baseline Results

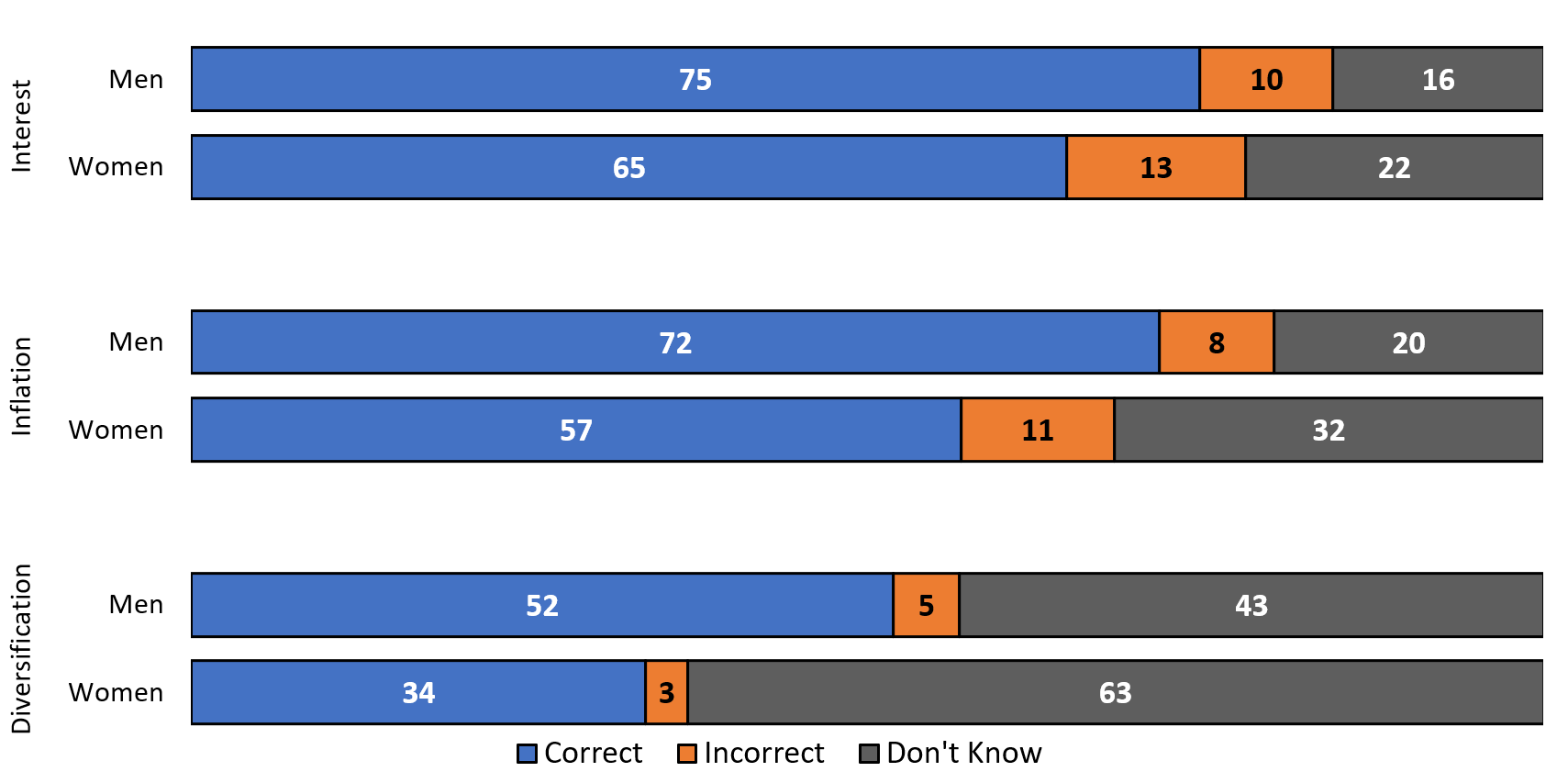

Results from the 2021 SHED show substantial gender differences in the responses to the financial literacy questions, consistent with prior studies (Lusardi and Mitchell, 2008; Lusardi and Mitchell, 2014; Bucher-Koenen et al., 2021). We first present results for the half of respondents who received an explicit "don't know" answer choice (figure 1). A smaller share of women answered each financial literacy question correctly. While women and men were similarly likely to select an incorrect answer, women were much more likely to select "don't know." For example, 63 percent of women selected "don't know" for the diversification question – 20 percentage points higher than the share of men who did so.

Source: Authors' calculations among the one-half of the 2021 SHED respondents who were asked the questions including "don't know" as a possible response. Key identifies bars in order from left to right.

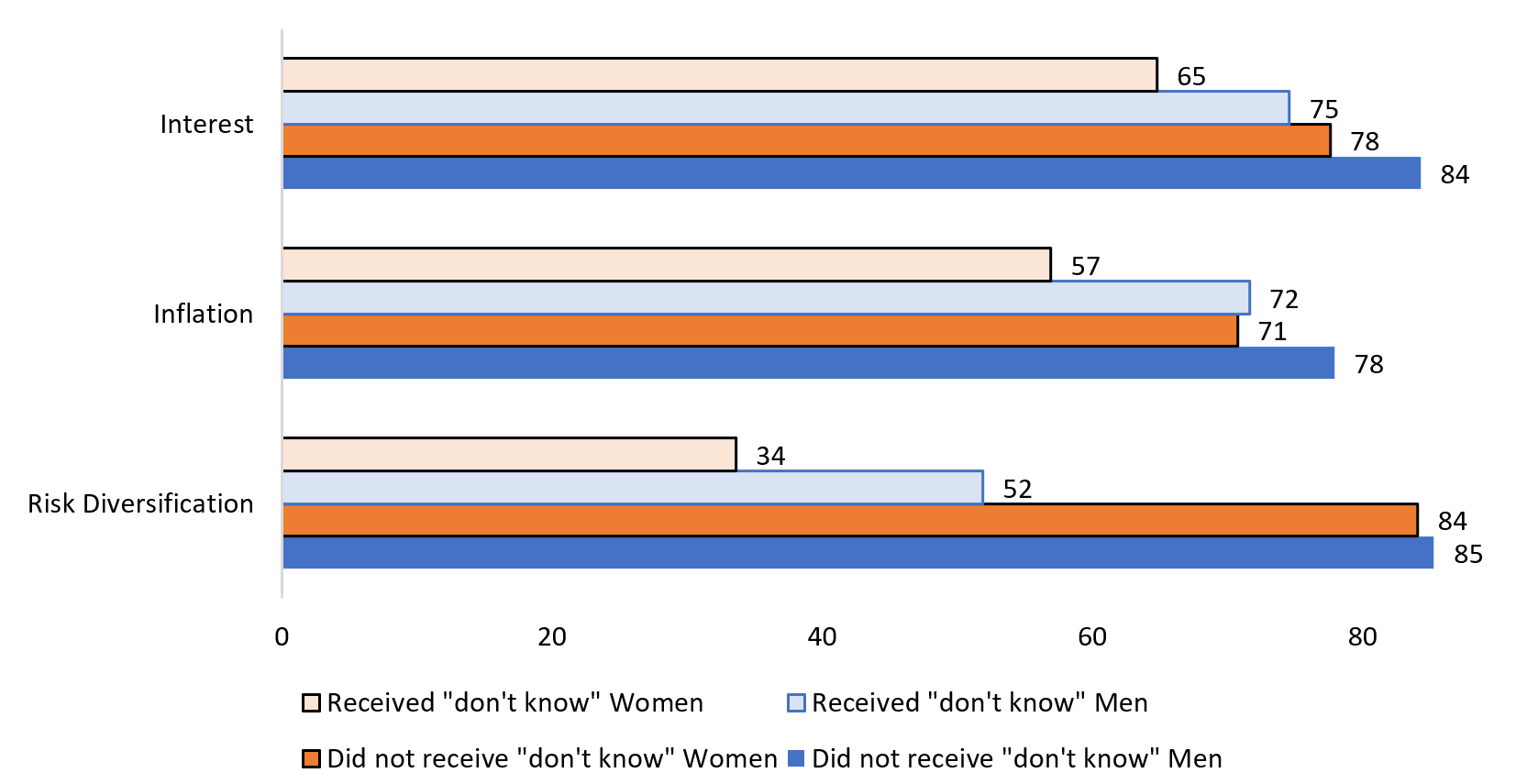

Next, we examine the half of respondents who did not receive an explicit "don't know" option. Because these respondents could not opt out of the questions by selecting "don't know," higher shares answered correctly (and incorrectly) compared with the half who received the explicit "don't know." Respondents could also refuse to answer a question by not selecting any answer choice. A higher share of adults refused to answer the financial literacy questions when the "don't know" option was removed, although question refusals remained low.

Looking at gender differences, we find that women were still less likely than men to answer correctly. However, these differences were smaller than what we saw among the group that received the explicit "don't know" (figure 2). In fact, for the diversification question, roughly the same share of women and men answered correctly (84 and 85 percent, respectively), resulting in a statistically insignificant 1 percentage point gender gap.

Source: Authors' calculations using the 2021 SHED sample. Key identifies bars in order from top to bottom.

Because women are more likely than men to answer "don't know" when that option is present, women have more "room for improvement" in increasing their share of correct responses when "don't know" is removed. To account for this, another way to compare gender differences in responses is to look at the share of "don't know" responses that convert to correct responses when the "don't know" choice is removed. Table 1 shows the increase in the share who answer correctly when the explicit "don't know" response option is absent, expressed as a share of those who answered "don't know" to the original survey question. This ratio is similar for men and women for the interest and diversification questions. Approximately 3 out of 5 "don't know" responses to the original interest question convert to correct answers (among both men and women). An even larger share of "don't know" responses to the diversification question convert to correct answers. This is to be expected, however: because that question has only two answer choices, a random guess is correct half of the time, compared with one-third of the time for the three-choice questions.
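The conversion ratio described above can be written as a small function. This is a sketch, not the authors' code; the function and argument names are hypothetical, and the inputs in the usage line are made-up shares rather than SHED estimates. Note that the two question versions went to different, randomly assigned respondents, so "conversion" here is a comparison of group-level shares, not a count of individuals who changed their answer.

```python
def dont_know_conversion(share_correct_with_dk, share_correct_without_dk, share_dk):
    """Increase in share correct when "don't know" is removed, as a
    fraction of the share who chose "don't know" when it was offered.

    All inputs are shares of respondents in [0, 1]:
      share_correct_with_dk    -- share correct in the arm offered "don't know"
      share_correct_without_dk -- share correct in the arm without it
      share_dk                 -- share choosing "don't know" when offered
    """
    if share_dk <= 0:
        raise ValueError("share_dk must be positive")
    return (share_correct_without_dk - share_correct_with_dk) / share_dk

# Hypothetical inputs: 50% correct with "don't know", 62% without it,
# and 20% choosing "don't know" when it is offered.
print(round(dont_know_conversion(0.50, 0.62, 0.20), 3))  # 0.6
```

A ratio near 1/3 for a three-choice question (or 1/2 for a true/false question) is what pure random guessing would produce on average.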

Only for the inflation question do we see notably different conversion rates by gender: a larger share of women's "don't know" responses converted to correct answers in the version without the explicit "don't know" option (43.5 percent, compared with 31.5 percent for men).

Table 1. Share of "don't know" answers that converted to correct answers, by gender (percent)

| Question | Male | Female |
|---|---|---|
| Interest | 62.2 | 58.0 |
| Inflation | 31.5 | 43.5 |
| Diversification | 77.3 | 79.7 |

Source: Authors’ calculations using 2021 SHED sample.

The removal of the "don't know" option also affected the share of adults who answered all three financial literacy questions correctly. Individuals who received the version of the questions without the explicit "don't know" option were more likely to answer all three correctly (table 2). By this measure, the gender difference in financial literacy was smaller for the questions without "don't know," but it remained statistically significant.

Table 2. Share answering all three financial literacy questions correctly, by question format and gender (percent)

| Question format | Male | Female | Difference (Male – Female, percentage points) |
|---|---|---|---|
| Original wording (includes "don't know") | 43.9 | 23.7 | 20.2 |
| Modified wording (excludes "don't know") | 63.1 | 50.8 | 12.4 |

Source: Authors’ calculations using 2021 SHED sample.
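The "all three correct" measure in table 2 is computed per respondent and then averaged within each format and gender group. A minimal sketch follows; the record layout and function names are hypothetical, and the tiny sample is made up. The note does not say whether its estimates are survey-weighted, so this sketch is unweighted.

```python
from collections import defaultdict

def share_all_correct(records):
    """Unweighted share answering all three questions correctly,
    keyed by (question format, gender).

    Each record is a dict with hypothetical keys:
      "format"  -- "with_dk" or "without_dk"
      "gender"  -- "male" or "female"
      "correct" -- tuple of three booleans (interest, inflation, diversification)
    """
    totals = defaultdict(int)
    all_right = defaultdict(int)
    for rec in records:
        key = (rec["format"], rec["gender"])
        totals[key] += 1
        all_right[key] += all(rec["correct"])  # True counts as 1
    return {key: all_right[key] / totals[key] for key in totals}

def gender_gap(shares, fmt):
    """Male minus female share answering all three correctly."""
    return shares[(fmt, "male")] - shares[(fmt, "female")]

# Made-up records, for illustration only
T, F = True, False
records = [
    {"format": "with_dk", "gender": "male", "correct": (T, T, T)},
    {"format": "with_dk", "gender": "male", "correct": (T, T, T)},
    {"format": "with_dk", "gender": "female", "correct": (T, T, F)},
    {"format": "with_dk", "gender": "female", "correct": (F, F, F)},
    {"format": "without_dk", "gender": "male", "correct": (T, T, T)},
    {"format": "without_dk", "gender": "male", "correct": (T, T, T)},
    {"format": "without_dk", "gender": "female", "correct": (T, T, T)},
    {"format": "without_dk", "gender": "female", "correct": (T, F, T)},
]
shares = share_all_correct(records)
print(gender_gap(shares, "with_dk"), gender_gap(shares, "without_dk"))  # 1.0 0.5
```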

Decomposing the changes from removing the "don't know" response

The higher share of correct responses among those who did not have the option to select "don't know" may reflect two mechanisms. First, some individuals who select "don't know" when presented with that answer choice truly do not know the answer to the question but guess correctly when the "don't know" choice is absent.

Second, other individuals have at least some information about what the correct answer is but select "don't know" when it is an available option. This behavior could reflect a lack of confidence, comfort, or motivation to give an answer. Krosnick and Presser (2010) observed that while offering a "don't know" option can encourage people who lack information on a topic to admit it, it may also discourage people who do have some relevant information from making the effort to answer the question. In the context of financial literacy, Bucher-Koenen et al. (2021) look specifically at these financial literacy questions and the role of confidence, finding that women are less likely than men to be confident in their answers, even among those who answered correctly.

The 2021 SHED did not include a general measure of confidence regarding financial literacy answers, but it did include a question asking non-retirees who had self-directed retirement savings how comfortable they were with making their own investment decisions in their retirement accounts. Non-retirees who were less comfortable making decisions with their investments were more likely to answer "don't know" to the financial literacy questions. Women were less likely than men to be comfortable making investment decisions, so this lower level of comfort with financial decisions and topics could contribute to women answering "don't know" at higher rates. Nonetheless, even after controlling for comfort with investing, women remain more likely than men to answer "don't know" to the financial literacy questions.
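The note does not report the model behind "controlling for comfort with investing." One common way to make such a comparison is a linear probability model, sketched below as a swapped-in illustration rather than the authors' specification. The data are made up (cell counts chosen so the "don't know" rates are exactly additive), and all names are hypothetical. The `female` coefficient is the gender difference in the probability of answering "don't know," holding comfort fixed.

```python
import numpy as np

def lpm_dont_know(dk, female, comfortable):
    """OLS linear probability model: dk = b0 + b1*female + b2*comfortable + e."""
    X = np.column_stack([np.ones(len(dk)), female, comfortable])
    coef, *_ = np.linalg.lstsq(X, dk, rcond=None)
    names = ["intercept", "female", "comfortable"]
    return {name: float(b) for name, b in zip(names, coef)}

def build_cells(dk_counts, n_per_cell=100):
    """Hypothetical data: dk_counts maps (female, comfortable) to the number
    of "don't know" answers among n_per_cell respondents in that cell."""
    dk, female, comfortable = [], [], []
    for (f, c), n_dk in dk_counts.items():
        dk += [1.0] * n_dk + [0.0] * (n_per_cell - n_dk)
        female += [f] * n_per_cell
        comfortable += [c] * n_per_cell
    return np.array(dk), np.array(female, dtype=float), np.array(comfortable, dtype=float)

# Made-up "don't know" counts per 100 respondents in each cell
dk_counts = {(0, 0): 30, (1, 0): 45, (0, 1): 10, (1, 1): 25}
coef = lpm_dont_know(*build_cells(dk_counts))
print({k: round(v, 3) for k, v in coef.items()})
# {'intercept': 0.3, 'female': 0.15, 'comfortable': -0.2}
```

In this toy example, being comfortable lowers the "don't know" rate by 20 points, yet women still answer "don't know" 15 points more often than men at the same comfort level, which is the pattern the paragraph above describes.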

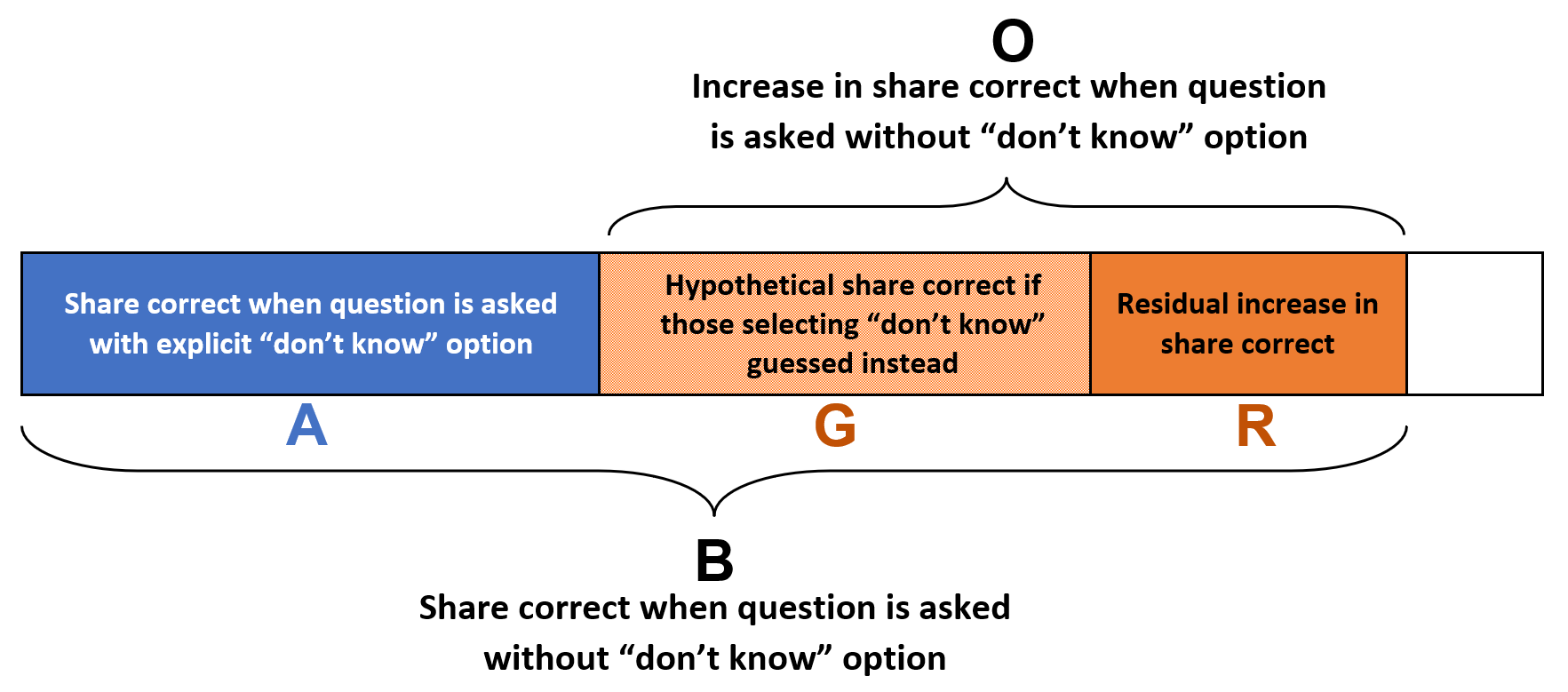

To decompose the overall increase in the share who answered correctly, denoted by O, into the component due to guessing, G, and the residual increase, R, we start with the sample that received "don't know" as an explicit answer option. For each respondent who selected "don't know," we randomly assign, with equal probability, one of the answer choices in place of the "don't know." Adding the correct answers among these imputed observations to the original share correct gives a counterfactual: what we would expect to see if, when respondents no longer have the option to select "don't know," they guess an answer completely at random. The resulting increase in the share correct from guessing, G, is represented by the lighter orange bar in figure 3.
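The imputation step can be sketched as follows, assuming responses are stored as answer labels with the string `"dk"` marking "don't know" (the labels, function name, and data are hypothetical, not SHED data). This is a single random draw, as described above; averaging several draws, or using the expected value, would reduce simulation noise.

```python
import random

def guessing_component(responses, correct, choices, seed=0):
    """Impute a uniformly random answer for each "don't know" and return G,
    the resulting increase in share correct as a share of ALL respondents.

    responses -- answers from the arm offered "don't know" ("dk" = don't know)
    correct   -- the correct answer label
    choices   -- the substantive answer choices (excluding "don't know")
    """
    rng = random.Random(seed)
    # rng.choice is only called for "dk" responses (short-circuit "and")
    imputed_correct = sum(
        1 for r in responses if r == "dk" and rng.choice(choices) == correct
    )
    return imputed_correct / len(responses)

# Hypothetical three-choice question: 70% correct, 10% incorrect,
# 20% "don't know" among respondents offered "don't know".
responses = ["more"] * 7000 + ["less"] * 1000 + ["dk"] * 2000
A = responses.count("more") / len(responses)
G = guessing_component(responses, "more", ["more", "same", "less"])
print(f"A = {A:.3f}, G = {G:.3f}, counterfactual A + G = {A + G:.3f}")
```

With 20 percent choosing "don't know" and three substantive choices, G should land close to 0.20 / 3, or about 0.067.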

Next, we compute the residual increase in the share correct, R, represented by the darker orange bar on figure 3, as follows:

$$ R=B-(A+G) $$

That is, we subtract the share correct when the question is asked with an explicit "don't know" option, A, and the share correct from guessing, G, from the share correct when the question was asked without "don't know" as an answer option, B.
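Reading O as the overall increase B − A (the note describes O this way but does not write it as a formula), the definitions above imply that the guessing and residual components add up to the overall increase. The second line gives the expected guessing component under uniform guessing, where d (our notation) is the share who chose "don't know" and k is the number of substantive answer choices (three for the interest and inflation questions, two for diversification).

$$ O = B - A = G + \bigl[B - (A + G)\bigr] = G + R $$

$$ \mathbb{E}[G] = \frac{d}{k}, \qquad \mathbb{E}[R] = B - A - \frac{d}{k} $$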

The true increase in the share correct that reflects knowledge, rather than guessing, likely lies somewhere between R and O. R nets out the increase we would expect if everyone who selected "don't know" were guessing. However, the true share of guessers among these respondents is unknown and cannot exceed 1, so R represents a lower bound on the increase in the share correct reflecting knowledge. Conversely, O, which assumes that every "don't know" that converted to a correct answer reflects knowledge, represents an upper bound.
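A stylized way to see why R and O bracket the knowledge-driven increase, under simplifying assumptions of our own: suppose a share s of the "don't know" respondents are pure guessers who pick uniformly at random, and the rest answer from knowledge. The guessers then contribute s·G to the increase in expectation, leaving K(s) as the knowledge component. Using O = G + R and G ≥ 0:

$$ K(s) = O - sG, \qquad 0 \le s \le 1 $$

$$ R = K(1) \;\le\; K(s) \;\le\; K(0) = O $$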

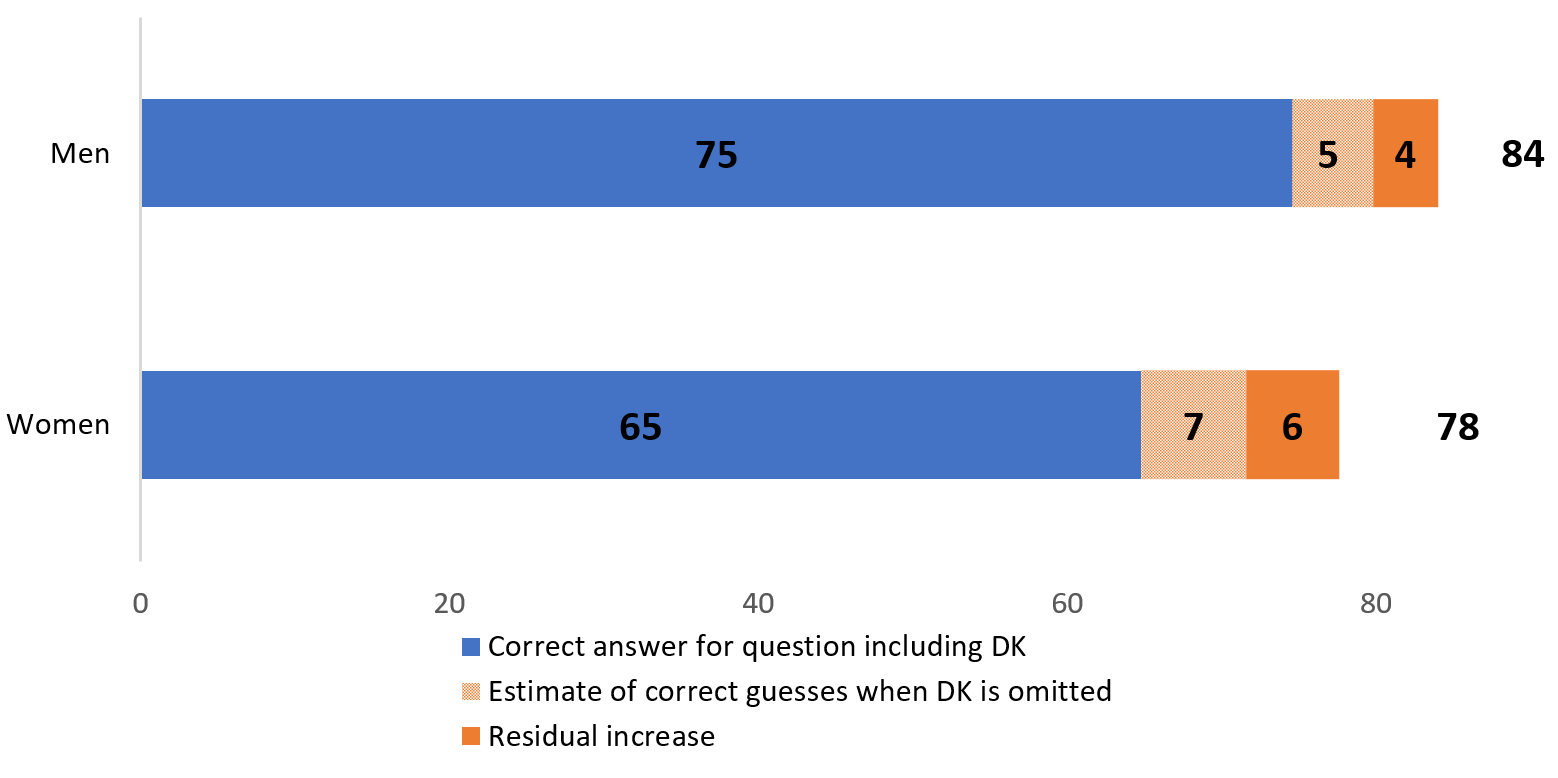

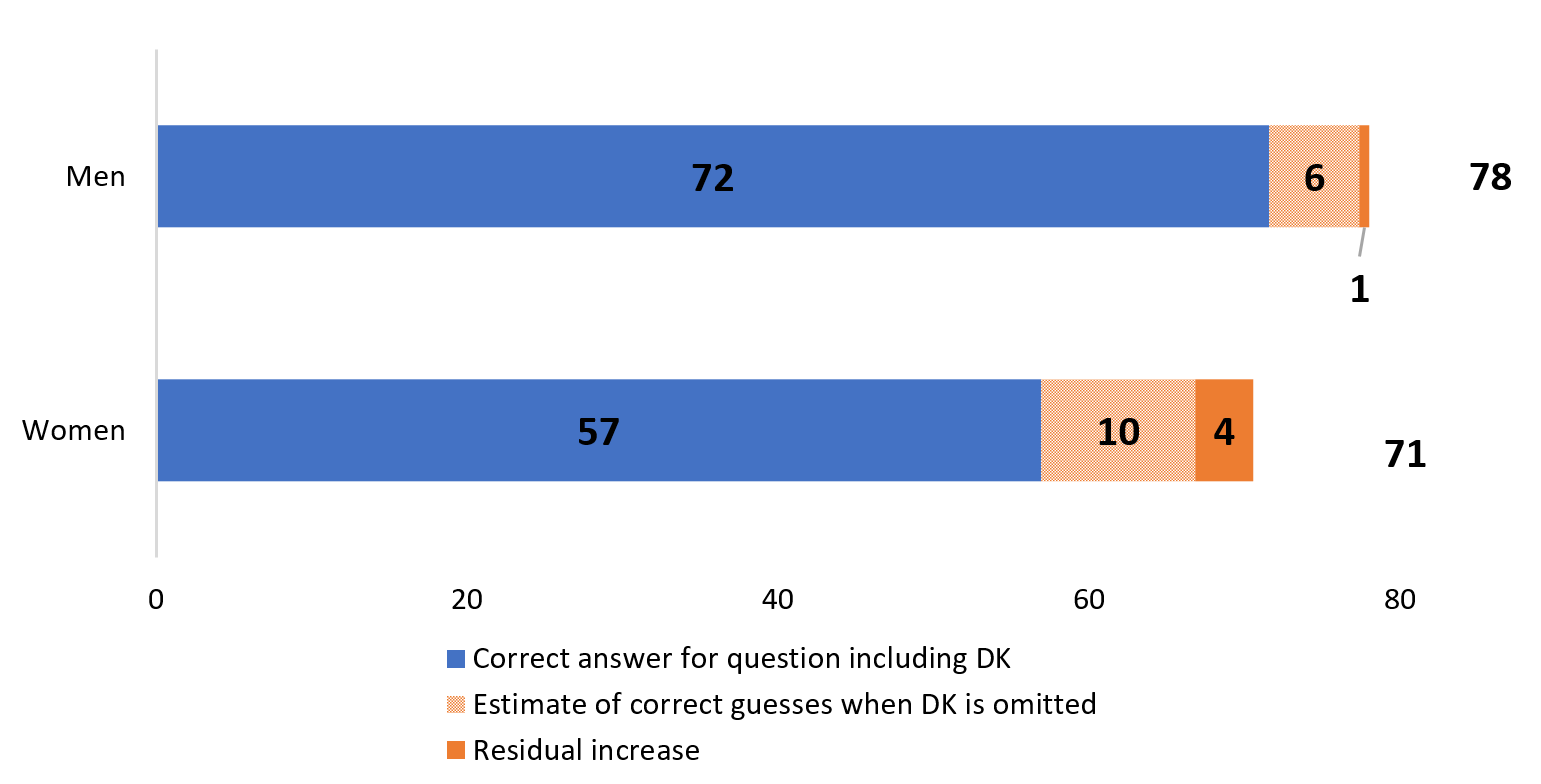

Figure 4 displays the components of our decomposition exercise for the three financial literacy questions. For the interest rate question (Panel A), 75 percent of men answered correctly when given the option to choose "don't know," and 16 percent chose "don't know." If those who said "don't know" truly had no knowledge to help answer the question, then when the "don't know" option is removed we would expect one-third of them to guess correctly and two-thirds to guess incorrectly, resulting in a total of about 80 percent providing the right answer (75 + 16/3 ≈ 80). Instead, we find that 84 percent answered correctly.
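The arithmetic in the interest rate example can be reproduced with the expected value of uniform guessing rather than a random draw. The inputs are the rounded figures quoted above for men (75, 16, and 84 percent), so the output will not exactly match the authors' unrounded estimates; the function name is hypothetical.

```python
def counterfactual_share_correct(share_correct_with_dk, share_dk, n_choices):
    """Expected share correct if every "don't know" respondent instead
    guessed uniformly among n_choices substantive answers."""
    return share_correct_with_dk + share_dk / n_choices

# Rounded figures for men, interest rate question (figure 4, panel A)
A, dk, B = 0.75, 0.16, 0.84
cf = counterfactual_share_correct(A, dk, 3)
print(f"counterfactual: {cf:.1%}; residual increase: {100 * (B - cf):.1f} percentage points")
# counterfactual: 80.3%; residual increase: 3.7 percentage points
```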

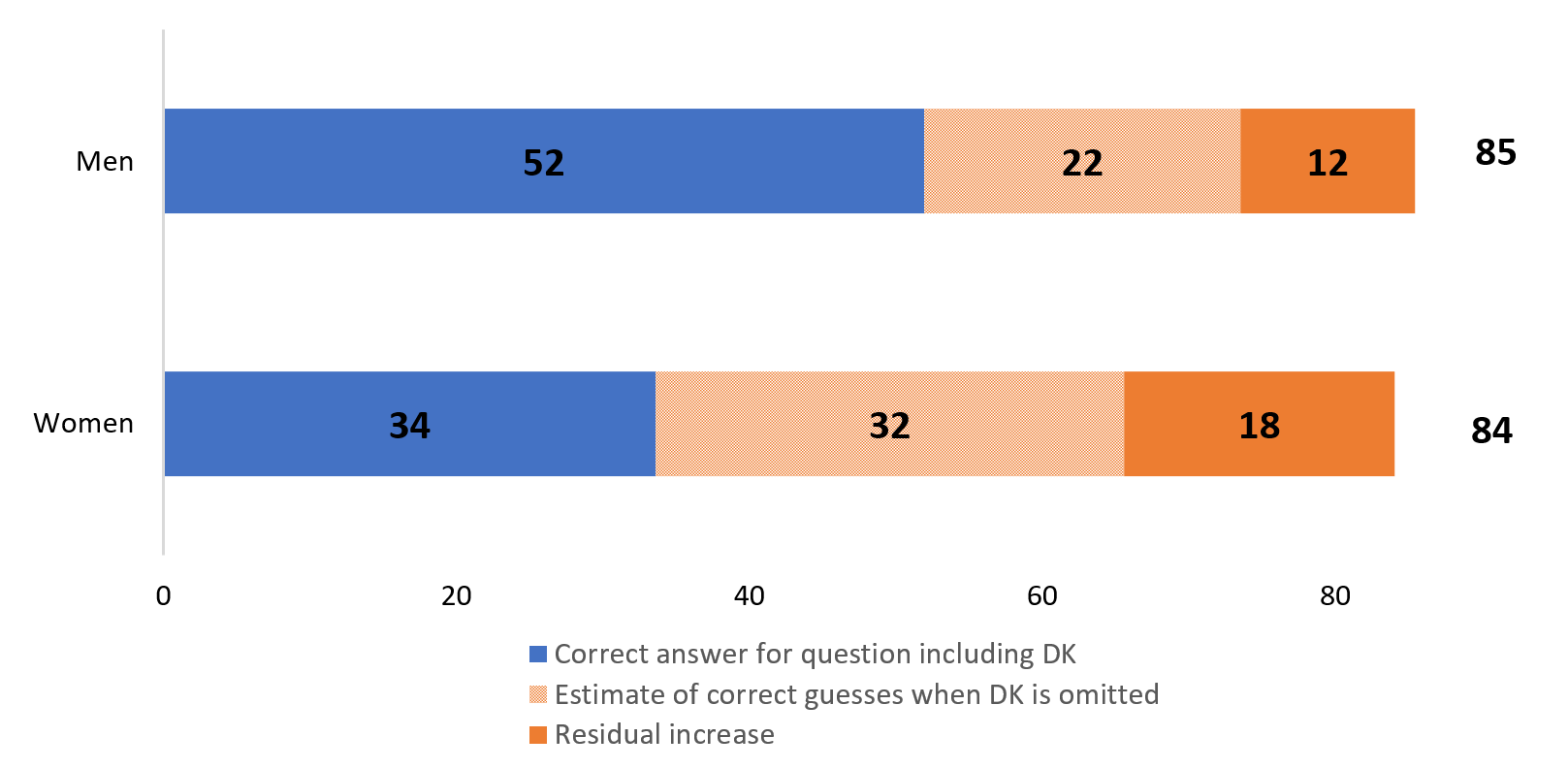

However, for the inflation question (Panel B), the increase in the share of men who answered the question correctly is only slightly greater than the imputed estimate. We interpret this to mean that nearly all of the increase in correct responses by men on this question is due to guessing. For women, the increase in the share answering the question correctly (14 percentage points) is larger than the increase expected from guessing alone (10 percentage points).

For the risk diversification question (Panel C), both men and women experienced increases in correct responses beyond what would be expected from guessing. While removing the "don't know" option reduces the gender disparity for all questions, only for the diversification question (Panel C) is the residual increase (not due to guessing) significantly larger for women.

Figure 4. Decomposing increase in 'Correct' share into increase due to guessing and residual increase (Panel A: Interest Rate Question; Panel B: Inflation Question; Panel C: Risk Diversification Question)

Source: Authors' calculations using 2021 SHED sample. Key identifies bars in order from left to right. Bar sections may not sum to overall totals due to rounding.

Because women have a higher share of "don't know" responses that could convert to correct responses, we might expect the residual increase to be larger for women than for men. As a check, we also look at this residual increase as a share of the overall increase in correct responses when the "don't know" option is removed. Table 3 shows this ratio, which corresponds to R/O in Figure 3. Consistent with the ratios in Table 1, men and women had similar shares of gains due to additional knowledge for the interest and diversification questions. However, for the inflation question, a larger share of women's increase in correct responses was due to additional knowledge. For both men and women, the share of the increase that can be attributed to additional knowledge was smaller for the inflation question than for the other questions.
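The ratio in table 3 normalizes the residual increase by the overall increase, which puts groups that start with different "don't know" shares on a comparable footing. A minimal sketch, assuming the expected guessing component d / k stands in for the random imputation (function name and inputs are hypothetical, not SHED values):

```python
def knowledge_share(a, b, share_dk, n_choices):
    """R / O: residual (non-guessing) increase as a share of the overall
    increase O = b - a, using the expected guessing component d / k."""
    overall = b - a
    if overall <= 0:
        raise ValueError("no overall increase to decompose")
    guessing = share_dk / n_choices
    residual = b - (a + guessing)
    return residual / overall

# Hypothetical: 50% correct with "don't know", 70% without, 30% chose
# "don't know", three substantive choices -> G = 0.10, O = 0.20, R = 0.10
print(round(knowledge_share(0.50, 0.70, 0.30, 3), 3))  # 0.5
```

A ratio near zero means the observed gain is about what pure guessing would produce; a negative value would mean the gain fell short of the guessing benchmark.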

Table 3. Share of overall increase in correct responses that reflects additional knowledge (R/O), by gender

| Question | Male | Female |
|---|---|---|
| Interest | 53.7% | 53.1% |
| Inflation | 10.1% | 27.0% |
| Diversification | 35.6% | 36.7% |

Source: Authors’ calculations using 2021 SHED sample.

The estimates produced in the counterfactual analysis (Figure 4) can be thought of as a lower bound on the increase in knowledge captured by removing "don't know." Some of the correct responses we attribute to guessing may, in fact, reflect true knowledge. (In Figure 4, this would appear as the lighter orange portion of the bars shrinking and the darker orange portion growing.) The half of the SHED sample who did not receive the "don't know" option had higher shares answering correctly than the pure-guessing counterfactual predicts, indicating that individuals had some knowledge about each question. Since the increase in the share correct was larger for women for all three financial literacy questions, removing the "don't know" option reduces the initial gender gap.

Conclusion

We document that when the "don't know" option is removed from the set of responses to financial literacy survey questions, the share of individuals who select the correct answer increases. Women experience larger increases in the share correct than men do. However, a gender gap still exists for two of the three financial literacy questions even when the "don't know" answer option is removed. We conclude that gender differences in answers to this set of financial literacy questions are related to factors such as confidence and motivation in answering the questions, as well as to true financial literacy knowledge.

There is a tradeoff between reducing the measured financial literacy gender gap and obtaining a tighter estimate of financial literacy. Using the version of the questions that includes the "don't know" option could introduce a gender bias, since there is evidence that women are less motivated to answer the questions. The results of our decomposition analysis suggest that researchers focused on measuring the gender gap in financial knowledge should consider using the survey questions without the "don't know" response option. However, removing the "don't know" answer choice could lead to more guessing: "don't know" responses carry information, and their absence inflates the share of correct answers.

References

Bhutta, Neil, Jacqueline Blair, and Lisa Dettling (2021). "The Smart Money is in Cash? Financial Literacy and Liquid Savings Among U.S. Families," Finance and Economics Discussion Series 2021-076. Washington: Board of Governors of the Federal Reserve System, https://doi.org/10.17016/FEDS.2021.076.

Board of Governors of the Federal Reserve System (2022). "Economic Well-Being of U.S. Households in 2021," https://www.federalreserve.gov/publications/files/2021-report-economic-well-being-us-households-202205.pdf.

Bucher-Koenen, Tabea, Rob J. Alessie, Annamaria Lusardi, and Maarten Van Rooij (2021). "Fearless woman: Financial literacy and stock market participation." No. w28723. National Bureau of Economic Research.

Hogarth, Jeanne M., and Marianne A. Hilgert (2002). "Financial knowledge, experience and learning preferences: Preliminary results from a new survey on financial literacy." Consumer Interest Annual 48, no. 1: 1-7.

Kleinberg, Katja B., and Benjamin O. Fordham (2018). "Don't know much about foreign policy: Assessing the impact of 'don't know' and 'no opinion' responses on inferences about foreign policy attitudes." Foreign Policy Analysis 14, no. 3: 429-448.

Krosnick, Jon A., and Stanley Presser (2010). "Question and questionnaire design." In J. D. Wright & P. V. Marsden (Eds.), Handbook of Survey Research (Second Edition). West Yorkshire, England: Emerald Group.

Lusardi, Annamaria, and Olivia S. Mitchell (2008). "Planning and financial literacy: How do women fare?" American Economic Review 98, no. 2: 413-17.

Lusardi, Annamaria, and Olivia S. Mitchell (2011). "Financial literacy and planning: Implications for retirement wellbeing." No. w17078. National Bureau of Economic Research.

Lusardi, Annamaria, and Olivia S. Mitchell (2014). "The economic importance of financial literacy: Theory and evidence." Journal of Economic Literature 52, no. 1: 5-44.

Mondak, Jeffery J., and Mary R. Anderson (2004). "The knowledge gap: A reexamination of gender-based differences in political knowledge." The Journal of Politics 66, no. 2: 492-512.

Anna Tranfaglia, Alicia Lloro and Ellen Merry (2024). "Question design and the gender gap in financial literacy," FEDS Notes. Washington: Board of Governors of the Federal Reserve System, January 02, 2024, https://doi.org/10.17016/2380-7172.3415.

Disclaimer: FEDS Notes are articles in which Board staff offer their own views and present analysis on a range of topics in economics and finance. These articles are shorter and less technically oriented than FEDS Working Papers and IFDP papers.